Continuous Delivery and Different Infrastructure Virtualization Options

Those requirements point to two possible options: VMware ESXi virtual machines or Docker, a container technology. The two can also be used together.

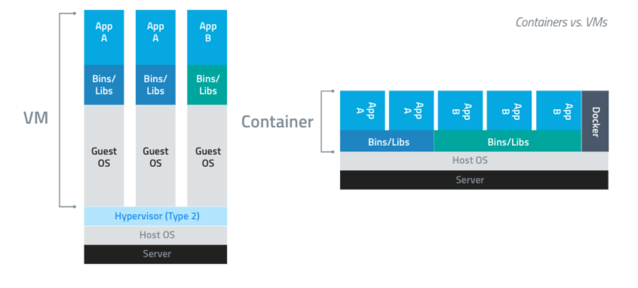

Docker containers provide OS-level process isolation, whereas virtual machines offer isolation at the hardware abstraction layer (i.e., hardware virtualization). In a modular web application, we can use machine virtualization to deploy into different environments (development, stage, production, etc.), while containers are best suited for packaging and shipping portable, modular software. The two technologies can also be used in conjunction for added benefits: for example, Docker containers can be created inside VMs to make a solution highly portable.

This combination can be adapted to build a Continuous Delivery infrastructure.

VMware Hypervisors

vSphere is VMware’s flagship virtualization suite, consisting of a range of tools and services such as ESXi, vCenter Server, vSphere Client, VMFS, SDKs and more. The suite functions as a cloud computing virtualization OS of sorts, providing a virtual operating platform to guest operating systems such as Windows, *nix, and so forth.

At the heart of the vSphere suite is VMware ESXi (versions 5.5 to 6.7), a leading hypervisor that makes hardware virtualization possible. It can be installed on most 64-bit Intel and AMD CPU architectures. Hypervisors allow multiple operating systems to live on a single host, each with its own set of resources, so each guest OS appears to have CPU, memory, and other system resources dedicated to its use. ESXi runs directly on bare-metal server hardware; no pre-existing underlying operating system is required. Once installed, it creates and runs its own microkernel, consisting of 3 interfaces:

- Hardware
- Guest system
- Console operating system/service console

Though an early virtualization pioneer, VMware is not the only show in town anymore: Microsoft Hyper-V, Citrix XenServer, and Oracle VirtualBox are also popular hypervisor technologies.

Docker / Kubernetes Containers

The Docker project’s primary intent is to allow developers to create, deploy, and run applications more easily using containers. For DevOps and CI/CD initiatives, application portability and consistency are crucial needs that Docker fulfils well. Containers make it possible to bundle an application with all the required libraries, dependencies, and resources for easy deployment. Because Docker uses Linux kernel features such as namespaces and control groups to create containers on top of the host OS, application deployment can be automated and streamlined from development to production.

For both developers and operators, Docker offers the following high-level benefits, among others:

- Deployment Speed/Agility – Docker containers house the minimal requirements for running the application, enabling quick and lightweight deployment.
- Portability – Because containers are mostly independent, self-sufficient application bundles, they can be run across machines without compatibility issues.
- Reuse – Containers can be versioned, archived, shared, and used for rolling back to previous versions of an application. Platform configurations can largely be managed as code.
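As a rough illustration of the reuse point, the sketch below keeps a history of deployed image tags and rolls back to the previous one. The `ReleaseHistory` class, the repository name `registry.example.com/shop/web`, and the version numbers are hypothetical and only serve the example; a real setup would read this information from the registry or the deployment tool.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseHistory:
    """Tracks deployed image tags. All names here are hypothetical."""

    repository: str
    tags: list[str] = field(default_factory=list)

    def deploy(self, tag: str) -> str:
        # Record the new version and return the full image reference.
        self.tags.append(tag)
        return f"{self.repository}:{tag}"

    def rollback(self) -> str:
        # Drop the current version and return the one deployed before it.
        if len(self.tags) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.tags.pop()
        return f"{self.repository}:{self.tags[-1]}"


history = ReleaseHistory("registry.example.com/shop/web")
history.deploy("1.0.0")
history.deploy("1.1.0")
previous = history.rollback()
print(previous)
```

Because every version is an immutable, tagged image, rolling back amounts to redeploying an older tag rather than rebuilding anything.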

Continuous delivery is all about reducing risk and delivering value faster by producing reliable software in short iterations. As Martin Fowler says, you do continuous delivery if:

- Your software is deployable throughout its lifecycle.
- Your team prioritises keeping the software deployable over working on new features.
- Anybody can get fast, automated feedback on the production readiness of their systems any time somebody makes a change to them.
- You can perform push-button deployment of any version of the software to any environment on demand.

Containerization of software allows us to further improve on this process. The most significant improvements are in speed and in the level of abstraction, which serves as a cornerstone for further innovations in this field.

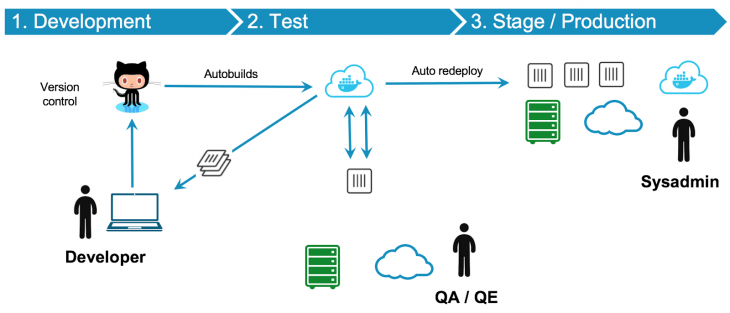

The continuous integration setup consists of:

- running unit tests immediately after each build
- building the Docker image that we use to develop our service
- running the build container and compiling our service
- creating the Docker image that we run and deploy
- pushing the final image to a Docker registry
- pulling from the Docker registry to automatically deploy the latest release on the different servers (development, stage, production)
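The steps above can be sketched as an ordered list of Docker CLI calls. This is a minimal sketch under several assumptions: the service name `orders`, the registry host `registry.example.com`, the file name `Dockerfile.build`, and the `make build` / `make test` targets are all hypothetical, and the order shown (compile first, then test) is one plausible reading of the list. By default the script only prints the commands instead of executing them.

```python
import shlex
import subprocess


def pipeline_commands(service: str, version: str, registry: str) -> list[list[str]]:
    """Return the Docker CLI calls for one pipeline run, in order."""
    builder = f"{service}-build:{version}"        # image with the build tools
    release = f"{registry}/{service}:{version}"   # image that gets deployed
    return [
        # Build the image used to develop and compile the service.
        ["docker", "build", "-f", "Dockerfile.build", "-t", builder, "."],
        # Run the build container and compile (hypothetical make target).
        # Getting the artifacts out (volume or `docker cp`) is omitted here.
        ["docker", "run", "--rm", builder, "make", "build"],
        # Run the unit tests right after the build (hypothetical make target).
        ["docker", "run", "--rm", builder, "make", "test"],
        # Create the image that is actually run and deployed.
        ["docker", "build", "-t", release, "."],
        # Push the final image to the registry.
        ["docker", "push", release],
        # On each target server: pull the release before starting it.
        ["docker", "pull", release],
    ]


def run_pipeline(commands: list[list[str]], dry_run: bool = True) -> None:
    for command in commands:
        print(shlex.join(command))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(command, check=True)


commands = pipeline_commands("orders", "1.4.2", "registry.example.com")
run_pipeline(commands)
```

In practice these steps usually live in the configuration of a CI server rather than in a script; the point here is only the order of the steps and the two separate images, one for building and one for running.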

Kubernetes, with its pods, allows a workflow similar to the one described for Docker. Kubernetes is more advanced and requires more ad hoc configuration than Docker.

Both technologies, and more recently VMware vRealize Orchestrator, provide an “elastic” property: they can dynamically auto-scale the cloud platform. That means that when the application is running on N machines, the workload can dynamically adapt to new demand by growing to N + M machines or shrinking to L < N machines, following the number of clients and the business requirements of a new digital product. Scaling up and down is done in different ways.

Horizontal scaling (scaling out dynamically) is quite easy, as you can add more machines to the existing pool. Vertical scaling, by contrast, is limited to the capacity of a single machine: scaling beyond that capacity results in downtime and comes with an upper limit. For autoscaling to work properly, it has to be configured beforehand, with some machines kept idle so that they can be added to the workload pool and activated when a workload threshold is reached.

Docker and VMs enable developers to follow TDD (Test-Driven Development), DDD (Domain-Driven Design) and Continuous Delivery within an Agile methodology, making it possible to create digital products in a more efficient, scalable and testable way. Using Docker and VMs also removes the risk that the developer's environment (DEV) and the deployment environment (PROD) differ, which would cause issues during deployment. Lastly, the company can save on the cost of buying new hardware, since deploying on Azure, AWS or another cloud is usually cheaper, and can increase the reliability of the final digital product or service.
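The threshold-based behaviour described above can be illustrated with a small simulation. This is only a sketch: the `Pool` class, the thresholds of 0.8 and 0.3, and the one-machine-at-a-time policy are assumptions chosen for the example, not the algorithm used by Docker, Kubernetes, or vRealize Orchestrator.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A pool of machines with some kept idle in reserve (hypothetical model)."""

    active: int          # machines currently serving traffic (N)
    idle: int            # pre-provisioned machines waiting to be activated
    min_active: int = 1  # never scale in below this number

    def rebalance(self, avg_load: float, high: float = 0.8, low: float = 0.3) -> int:
        """Move at most one machine between idle and active; return active count."""
        if avg_load > high and self.idle > 0:
            # Scale out: activate one idle machine.
            self.active += 1
            self.idle -= 1
        elif avg_load < low and self.active > self.min_active:
            # Scale in: return one machine to the idle reserve.
            self.active -= 1
            self.idle += 1
        return self.active


pool = Pool(active=3, idle=2)
for load in (0.9, 0.95, 0.85, 0.5, 0.1):
    print(load, pool.rebalance(load))
```

Note how the third reading (0.85) is above the threshold but cannot trigger a scale-out, because the idle reserve is already empty. This is why the reserve has to be sized in advance.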

If you would like to learn more about this topic, see the following links:

https://martinfowler.com/bliki/ContinuousDelivery.html

https://techbeacon.com/enterprise-it/scaling-containers-essential-guide-container-clusters

Check out this video if you want a quick overview of the difference between containerization and virtual machines: